Lately I have been doing a lot of work on SQL Servers that have 24 or more processor cores installed in them. Many of these systems utilize a hardware non-uniform memory architecture, or NUMA, while a few of them do not. This led me into a good bit of additional research into the differences between the two configurations. In this blog post, we’ll look at what NUMA is and why it matters, and then in a separate blog post we’ll look at what this means to SQL Server and the differences between hard and soft NUMA configurations specifically for SQL.

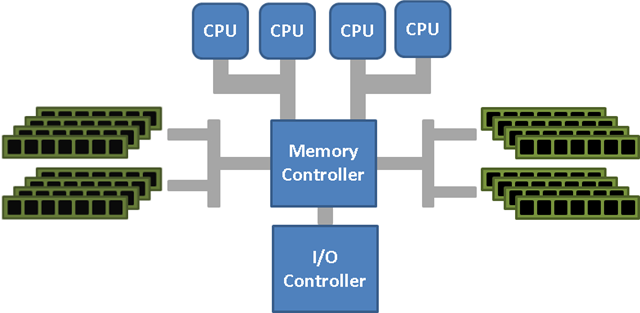

Only a few years ago, NUMA was synonymous with specialized hardware configurations that were designed to scale beyond the processor and RAM limitations of traditional Symmetric Multi-Processing (SMP) systems. The first NUMA system I learned anything about was the IBM x3950 series of servers that were used in a PASS Keynote Demo in 2009. The server being used in the demo was actually four x3950 servers that were interconnected as hardware NUMA nodes using the ScaleExpander Option Kit sold by IBM. Each of the hardware NUMA nodes was a very basic 4-way SMP system with its own processors, memory and I/O devices that could also have operated as a standalone server. In an SMP configuration, all of the processors have equal access to the memory and I/O in the system as shown in the following diagram.

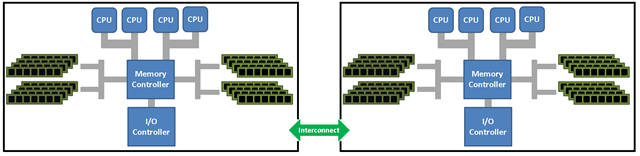

While SMP systems made it possible to scale up the number of processors available inside a single server, as more processors were added to the system the processor bus became a limitation to the overall system performance. In the past, systems like the IBM x3950 series of servers were able to scale up the number of processors and amount of RAM available in a single solution by interconnecting multiple SMP systems with a high speed interconnect that allowed two or more systems to function as if they were a single system. However, one of the problems associated with connecting multiple nodes with an interconnect was that memory access from the processors in one node to the memory in another node was not uniform; hence the name Non-Uniform Memory Architecture. The interconnect between the two systems introduced latency for memory access across nodes. The traditional concept of NUMA using multiple interconnected SMP systems as NUMA nodes would conceptually resemble the following:

Technically this is more complicated, but the general concept is that each of the nodes has access to all of the memory in the total system through the interconnect, at a slight performance penalty for memory that belongs to another node. For this reason, special considerations had to be taken when writing software to run on NUMA configured systems, and the Windows Server OS and SQL Server both have NUMA optimizations built into their code base to optimize memory locality. While in the past most people that were using hardware NUMA configurations would have known about it, recent changes in processor architectures have made hardware NUMA configurations much more commonplace, and most people I’ve talked to don’t even realize that their new server utilizes hardware NUMA.
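To make the locality penalty a little more concrete, here is a minimal Python sketch of the average memory latency a thread would see when only part of its memory accesses are node-local. The function name and the latency figures are made-up placeholders for illustration, not measurements from any particular server.

```python
def average_latency_ns(local_fraction, local_ns, remote_ns):
    """Weighted average latency for a mix of local and remote memory accesses."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be between 0 and 1")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


# Hypothetical latencies, chosen only to illustrate the calculation.
LOCAL_NS = 100.0
REMOTE_NS = 150.0

for fraction in (1.0, 0.75, 0.5):
    # The average climbs toward REMOTE_NS as fewer accesses stay on the local node.
    print(fraction, average_latency_ns(fraction, LOCAL_NS, REMOTE_NS))
```

The point of the sketch is simply that the more of its memory a workload finds on its own node, the closer it stays to the local latency, which is what NUMA-aware software tries to achieve.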

AMD Hyper-Transport (HT)

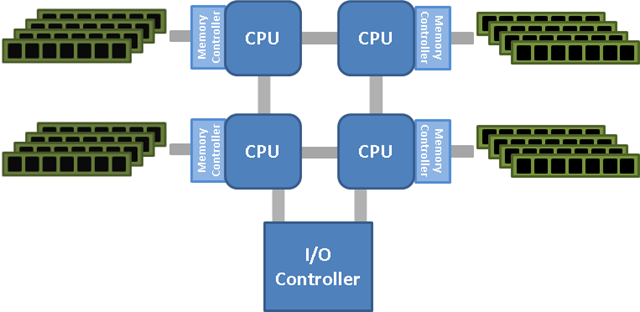

Where the older SMP architecture had a separate memory controller in the Northbridge of the motherboard, newer systems have an integrated memory controller built into the processor itself, and each processor has its own memory bank. The first processors to introduce an integrated memory controller were the AMD Opteron series of processors in early 2003. Since the introduction of the Opteron series, other AMD processor lines have included an integrated memory controller as well. Just as with traditional NUMA configurations, the hardware NUMA created by each processor having an integrated memory controller requires an interconnect to exist between the processors to allow for memory access by the other processors in the system. AMD processors share memory access through Hyper-Transport (HT) links between the processors.

The HT interconnects between processors allow the processor cores in one CPU to access memory in a different CPU’s memory bank, but there is a cost for accessing this remote data. To find the most recent copy of the data, probes must be sent out to all of the CPUs to determine whether one of them holds a newer version in its cache, and this increases latency. To combat this, AMD introduced HT Assist in the Istanbul line of processors, which tracks data in the processor caches to help direct the processors to the correct location of the most recent copy of the data. This reduces the probe traffic on the HT links and increases the efficiency of the CPU.
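The difference between broadcasting probes and consulting a record of what is cached where can be sketched with a toy model. This is only an illustration of the general idea described above, not AMD's actual HT Assist implementation; the function names and the dictionary-based directory are hypothetical.

```python
def probes_broadcast(num_cpus):
    """Without any tracking, the requesting CPU probes every other CPU."""
    return num_cpus - 1


def probes_with_directory(directory, address, requester):
    """With tracking, probe only the CPU recorded as caching the line.

    directory maps a cache-line address to the CPU holding the latest copy.
    Returns the number of probes that would be sent.
    """
    owner = directory.get(address)
    if owner is None or owner == requester:
        return 0  # no other CPU is recorded as caching this line
    return 1  # a single directed probe to the owning CPU


# Hypothetical state: CPU 2 holds the newest copy of the line at 0x1000.
directory = {0x1000: 2}

print(probes_broadcast(4))                          # probes sent in a 4-CPU system
print(probes_with_directory(directory, 0x1000, 0))  # line cached on another CPU
print(probes_with_directory(directory, 0x2000, 0))  # line not cached anywhere
```

In this simplified model the broadcast cost grows with the number of CPUs, while the tracked lookup sends at most one probe, which is the kind of traffic reduction the paragraph above describes.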

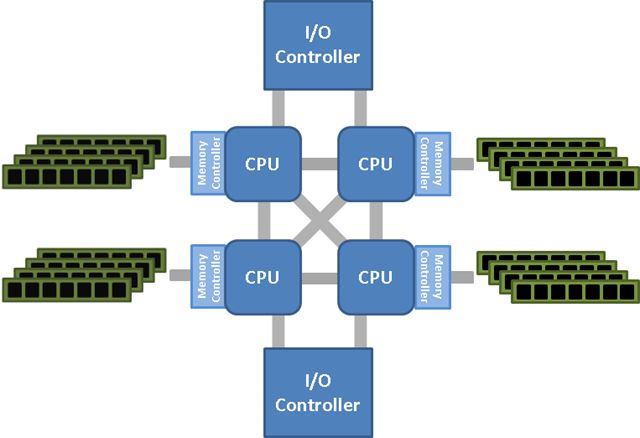

Intel Quick-Path Interconnect (QPI)

As the number of cores on a single processor increased, Intel followed AMD and introduced an integrated memory controller with its Nehalem generation of processors, including the Nehalem-EX series, which share memory access through Intel’s Quick-Path Interconnect (QPI). The Intel QPI interconnects the processors with each other in a similar manner to the AMD HT interconnect. However, the QPI snoop-based cache forwarding implementation can return remote data in as little as 2 hops. (It should be noted that this is in no way an endorsement of Intel over AMD, as there may be similar optimizations in AMD processors that I have not yet read about.)

Intel offers a detailed demo of their QPI architecture on their site that really helps in understanding the differences between SMP and NUMA configurations (http://www.intel.com/technology/quickpath/demo/demo.htm).

Node Interleaving

On newer server systems based on processors that have integrated memory controllers, the option still exists to run the server in an SMP-like configuration known as Node Interleaving. This option is generally configurable in the system BIOS and causes the system to be treated as if it were a traditional SMP system by the operating system and by any applications that are NUMA aware and optimized. When node interleaving is enabled, the system becomes a Sufficiently Uniform Memory Architecture (SUMA) configuration where the memory is broken into 4KB addressable regions and mapped from each of the nodes in a round robin fashion so that the memory address space is distributed across the nodes. When the system is configured for NUMA, all of the memory in each node is mapped into a single sequential block of the memory address space. For certain workloads, node interleaving can be beneficial to performance since the latency between the hardware nodes of a system using processors with integrated memory controllers is small. However, for applications that are NUMA aware and that have NUMA optimizations, node interleaving should remain disabled to allow the application to make optimized use of the hardware resources.
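The two address mappings can be sketched in a few lines of Python. This is a simplified model that assumes every node has the same amount of memory; the node count, the per-node memory size and the function names are hypothetical, and real hardware may lay out its address space differently.

```python
INTERLEAVE_BYTES = 4 * 1024  # 4KB regions, as described above


def node_interleaved(address, num_nodes):
    """Node interleaving: consecutive 4KB regions rotate across the nodes."""
    return (address // INTERLEAVE_BYTES) % num_nodes


def node_numa(address, bytes_per_node):
    """NUMA: each node owns one sequential block of the address space."""
    return address // bytes_per_node


NUM_NODES = 4                   # hypothetical 4-node server
BYTES_PER_NODE = 16 * 1024**3   # hypothetical 16GB of memory per node

for address in (0, 4096, 8192, 16384, 16 * 1024**3):
    print(hex(address),
          node_interleaved(address, NUM_NODES),
          node_numa(address, BYTES_PER_NODE))
```

With interleaving, neighboring 4KB regions land on different nodes, so no application can keep its memory local. With the NUMA mapping, the first 16GB all belongs to node 0, which is what lets NUMA-aware software place its memory close to the processors that use it.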

Why does this matter?

I’ve been working with hardware NUMA servers, using the newer processors with integrated memory controllers, for the last two years, and not once did I really have to worry about whether the server was using NUMA or not. Microsoft has done a lot of work in how they built Windows Server and SQL Server to make working with NUMA configurations relatively pain-free. However, I recently ran into some issues while working with client systems that forced me to look deeper into NUMA to gain an understanding of how it affects SQL Server, and what options are available for configuring SQL Server for NUMA. I’ll discuss the specifics of these in my next blog post in a few days.
